Time for an even more generic way to describe disk formats...

18 July 2002

Introduction

This work-in-progress was a long time coming, but we thought it better to wait until the work was complete instead of giving bits and pieces of information here and there. We have tried to make it readable, but in doing so, it gets longer and longer...

As always, this WIP update relies on terminology covered in previous updates, but where sensible we have replicated the important information here.

Anyway....

Time for an even more generic way to describe disk formats!

Mark Knibbs sent us an article from an old Amiga DevCon (Fig 1). This document contained an example disk description file for normal ADOS disks, written in a language called “Freeform” that was developed by Magnetic Design Corporation for the Trace series of commercial mastering machines.

This language, used in conjunction with the Trace machines, is responsible for most of the copy protection schemes on the Amiga and the ST, and probably on other platforms. This is what Rob Northen would have used to describe his Copylock protection (as used on the Amiga and ST) for mass reproduction.

Looking at this disk description file we noticed it was strikingly similar to the description language we had already implemented. However, it was evident that the encodings were not implicit to the datatypes; they were abstracted from the data level. This way it is possible to describe quite different looking formats with minimal changes in their descriptor, so we decided to do the same and abstract the data from its encoding.

Unfortunately this meant a fairly sizable change, and one that would require all the currently known block descriptors to be modified, but it is a very important change as is described below.

What we had

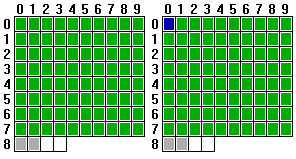

As you have probably realised by now, we are not into hacks or kludges. This new way of describing disks will be more in line with the stuff that could be generated by the Trace series of machines (Fig 2). The problem was that it was getting more and more annoying to add “features” to the descriptors for various reasons.

So what would we do if a disk format is essentially the same as another, with only slight differences in things like offset values and, most notably, data encoding [1]? Previously we had to add more data field types. Simply put, we had to hard code more data field types [2] that were probably only used for a few games.

Note 1: Before, we have talked about data encodings at the physical level. Examples: MFM, MFM2, FM, GCR, etc. Also at a higher level, data encoding could be different ways of representing data on top of the physical encoding. Examples: Amiga continuous long words (odd/even pairs), Amiga block long words (odd/even blocks), RLE (Run Length Encoding), etc. So you might have a set of actual data bytes that are used by a game, encoded as Amiga continuous long words and then encoded by MFM on the disk. So basically, there can be (and usually are) several layers of encoding.

Note 2: A better explanation of data field types is later in this update.

This was pointless, error prone and would have ended up being much more work because we would have to manually support the many variations of a basic format. There was no point in doing anything else until this work was complete. The deciding factor though, was when we started tracing a lot of various games and all the little changes between them would always need new data items, just because of the encoding. It started to get annoying, but it was all that could be done with the old analyser. This would have also become very messy in a few months, and by that time there would have been no way to address the problems.

What we did about it

Instead, we decided to further develop the “Encode” modifier type (an instruction in our own disk description language) to tell the system how the next n bits are encoded. So when the decoder part of the analyser reads the Encode command, it will process the next n bits as it is told without assuming anything. You might think of this as making the descriptors more “intelligent”.

This is easier to maintain and more generic and means we do not have to add a new encoding variant every time a different one is encountered for a certain data type. In fact this method was already used in many of the descriptors for the ADOS style scrambled headers but was not really used for anything else. Encoding was implicit for a certain data type - in other words, most data types encountered automatically selected an encoding. This is no longer the case.

To add an encoding, the only thing we need to do is define a new decoder for it in the Encode command logic - this is done only once each time a new encoding is found. The data items remain unchanged unless a new item is really needed to describe a format, but this will no longer happen very often if at all. This is a far cleaner way of doing it.

The main change is in the block descriptors. The encoding specific data types have been removed, and the data items are instead preceded by an Encode command. This method allows easy addition of any encoding type (physical types such as MFM2, FM, GCR, etc., or logical encodings) without any new data items being introduced.
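The idea can be sketched roughly as follows. This is an illustration only and not the analyser's code: the `DECODERS` table, the `decode_block` function, the tuple-based descriptor layout and the two toy encodings are all hypothetical stand-ins.

```python
# Hypothetical sketch: encodings live in one registry and data items
# stay generic. Names and the descriptor layout are illustrative only.

def decode_plain(bits):
    """Identity decoder: the bits are the data."""
    return list(bits)

def decode_inverted(bits):
    """Toy stand-in for a real encoding: every bit is inverted."""
    return [b ^ 1 for b in bits]

# Adding an encoding means adding one entry here, once.
DECODERS = {"plain": decode_plain, "inverted": decode_inverted}

def decode_block(descriptor, bits):
    """Walk a descriptor; 'encode' selects how the following data is decoded."""
    decoder = DECODERS["plain"]
    pos = 0
    fields = {}
    for command, arg, *rest in descriptor:
        if command == "encode":
            decoder = DECODERS[arg]      # nothing is assumed from the data type
        elif command == "data":
            nbits = rest[0]
            fields[arg] = decoder(bits[pos:pos + nbits])
            pos += nbits
    return fields

descriptor = [
    ("encode", "plain"),
    ("data", "header", 4),
    ("encode", "inverted"),
    ("data", "payload", 4),
]
result = decode_block(descriptor, [1, 0, 1, 0, 1, 1, 0, 0])
print(result)
```

Note that the `data` items are identical in both cases; only the preceding `encode` entry differs, which is the point of the change.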

Changes to the Analyser Core

This section is a rather complicated one and we decided to fill in a lot of information as so much has changed since the last update. This information should make it easier to understand both the changes and the example at the end of the document. This section duplicates information from previous WIP updates along with the new material.

The best way to start is probably from the top level with something simple (a disk image) and go downwards to the most basic level of the analyser (the type of a data descriptor).

Just for reference, here is the structure of a disk “top-down” from a description perspective:

- Disk Images

- Format (Track) Descriptors

- Block Descriptors

- Data Descriptors

- Data Field Types

- Other

When something has changed about a section it is marked under a duplicate header.

Disk Images

The disk images going into the analyser are currently 3.5” disks that are dumped by contributors with the dumping tool. They normally contain 82 cylinders (0-81) or 84 cylinders (0-83) if the disk drive used can safely read up that far, but the image format itself may contain any number. It is unlikely many games will use cylinders that high, but it is best to be sure.

Each cylinder contains two tracks, one on each side of the disk. We normally refer to tracks as “cylinder x.head y”, where the head is 0 or 1. E.g. 40.0 is cylinder 40, head 0.

Disk Images - New or Changed

Nothing. The disk-dumping tool has not changed since the first public version. We did wrap it in some user-friendly menus but the tool itself has remained the same.

Format (Track) Descriptors

On the Commodore Amiga, each track can contain an entirely different format to the next, so we define disk formats on a track-by-track basis. The only constant factor on Amiga bootable disks is that track 0.0 (cylinder 0, head 0) should be able to decode in AmigaDOS format. This is what the Amiga expects when it begins loading the bootblock on this track and thus the format must conform to the standard format in order to do so. Note that it does not actually have to be standard, just properly readable that way.

As you might expect, this was the place to start when a cracker wanted to learn how data on the disk is stored (assuming it is not AmigaDOS) since it contains the instructions to load that data - at least in part.

In order to recognise a track, we need to describe it. When the analysis occurs it finds the format that best matches a track or indicates that the track is unknown. Any integrity information held by the track format is put into the track description and checked against to indicate any errors on the disk.

Format (Track) Descriptors - New or Changed

Bye bye descriptors...

Huge cleanup. Due to making the whole system more generic, all the old descriptors have been scrapped.

Re-implemented and New Formats

Formats now are named using their original names for reference, where the name is known. Thanks to Galahad for providing the missing names.

The titles listed against specific formats are just examples; usually quite a few games share the very same format. In practice this means a lot more games now fully decode.

- AmigaDOS: standard

- AmigaDOS hdr data: Some programs store data in the OS part of the block header area.

- AmigaDOS trk mod: Modified track number, used by many protections since most programs refuse to read such a track, including the OS itself.

- AmigaDOS, st mod: Another ADOS variant, the mark and track values are changed. Seen on Turbo Outrun.

- AmigaDOS, no info: The OS info part is not present in this ADOS variant. Found on Savage.

- Arc, Arc Developments: found on Forgotten Worlds.

- Arc, another format found on Predator 2, The Simpsons Bart vs Space Mutants, WWF European Rampage, RoboZone.

- Back to the Future 3, by Jim Baguley, Image Works

- Battle Squadron by Innerprise

- Bonanza Bros

- Core Design: on Premiere, Thunderhawk.

- Cosmic Bouncer by ReadySoft

- CHW: Loads of A-Lock variants, found on games GemX, Apidya, Turrican 3 (including highscore saves), Dugger, Rolling Ronny, Eliminator and many others. These are mostly by German-oriented publishers, like Rainbow Arts, Starbyte, Magic Bytes, Linel, etc.

- DMA DOS: various DMA Design titles, found on Beast 3 and Lemmings so far.

- DMA DOS, a different one: Menace, Blood Money (encrypted).

- DMS, Digital Magic Software: Escape from Colditz, Fly Fighter.

- Elite Systems: A few different ones. Slight variation of data size on Commando, Ikari Warriors, Paperboy, ThunderBlade, Human Killing Machine, Gremlins 2, etc.

- Elite Systems, new version on Mike Read’s Pop Quiz & Mighty Bombjack.

- Firebird, Starglider 2, Virus.

- Firebird, a slightly different one for IK+.

- Firebird, a slightly different one for After Burner.

- Golden Goblins: on Circus Attractions, Grand Monster Slam.

- Great Giana Sisters by Time Warp (ready for when we find an original!)

- Gremlin: On Footballer of the Year 2, Gary Lineker’s Hot-Shot.

- Lankhor: Maupiti Island

- LGO, Logotron: variants on Archipelagos, Kid Gloves, Gauntlet 2, Golden Axe.

- MT variants: Factor 5 games, like Turrican, Turrican 2, Katakis (including highscore saves). One Turrican game has its highscore checksum routine messed up. It calculates the value with the wrong number of “data count” causing unmodified versions to fail the check.

- Nova, Novagen Software: Used on their titles like the Mercenary series with slight changes on the mark value and the number of marks. This format uses the advanced search function in the mark count and value fields so it can find out the real values by itself (details later).

- Ordilogic: Found on Unreal, Agony.

- Ork, a game by Psygnosis

- Rainbow Arts: Rock’n’Roll.

- Rainbow Arts: Another version on X-Out.

- PA, Ocean, by Pierre Adane: Variants found on Ilyad, Toki and Pang.

- Perihelion by Psygnosis

- PDOS (Rob Northen): Team 17/Probe titles, Red Barron, All Terrain Racing.

- PDOS variant: Bill’s Tomato Game, Lure of the Temptress.

- Prince of Persia by Brøderbund

- Protec: on Silkworm

- Protec variant on Subbuteo

- Psygnosis: Barbarian 2, Air Support, Shadow of the Beast 3 Intro disk (2 variants found on the same disk!).

- RATT: Games by Tony Crowther, like Captive.

- RL DOS, ReadySoft: Space Ace (1 & 2), Dragons Lair: Time Warp, Guy Spy.

- RPW, Sales Curve: Ninja Warriors

- Running Man by Grandslam

- Sega: Super Hang On

- Software Projects: Manic Miner

- SOS, Sensible Software: found on games like Sensible Soccer, Megalomania.

- SFX, SpecialFX: variants on Batman The Caped Crusader, Head Over Heels, Midnight Resistance, The Untouchables.

- SS, Gremlin: used on Gremlin games, like Lotus 2, Lotus 3, Supercars 2, Utopia, Zool etc.

- Sword of Sodan by Discovery Software

- Sword of Sodan, quite a different version (probably EU/US version difference).

- Thalamus: Winter Camp

- THBB, The Bitmap Brothers: On various versions of Speedball.

- TSL: Digital Illusions games like Pinball Dreams.

- Universe: A game by Revolution

- Wizard Warz: A game by GO!

- Zeppelin: on Universal Warrior

- Zeppelin variation on Brides of Dracula

- ZZKJ, Rainbird

As you can see from above there are some formats that are similar. To reflect this, the formats that are only slight variations of another format were reorganized to use parameters for the changing data parts.

Some Statistics

- There are 88 formats supported so far.

- With well over 1000 disk images, at the point of 82 formats supported, only 70 of them did not decode! (not including custom protection tracks)

Note: Many of these formats are known to be re-used several times, and for others that is unlikely, but not impossible. When we think the format was not re-used we name it after the game, otherwise we name it after the author (if known) or company. If something makes it clear it was derived from another format, it will normally be named as a variant, but it may still be marked as such after author/company info if nothing better comes along.

Aside

It is probably interesting to note that all of these formats were added in the last few weeks of this update, in parallel with other developments. You can probably now get an idea of how quickly a disk format can be added once it is reverse engineered, and therefore why this work was so important. “Gremlins 2” was about 5 minutes to do including tracing. This was because it is a variation on the existing Elite Systems format. There is nothing really fancy about the process - though it does require non-trivial pre-requisite knowledge.

As above, it is very straightforward to add a format by converting a traced loader. Everything else, like exploiting reconstruction possibilities, calculating sizes, etc. is all automatic. We are able to do this now, due to the analyser technology being mostly complete.

It does take much more development when new processes/methods are found (the “PDOS key finder” external process for example, which can be seen later). However, this will become less and less likely over time because of the limitations of Freeform - you cannot describe something if the data type was not in the language. They had to use standard elements to build a format - which is good news for us since it is less work.

It is harder to add “homemade” formats, as they could contain anything, but of course it is still completely possible. It is not likely that they are common though since they could not be duplicated - not with a Trace anyway and that was the industry standard. Perhaps this is why you sometimes hear stories of developers doing a protection that was “so good, it could not be duplicated by the Trace machines” and so they had to modify it. Likely this is just because it could not be described in Freeform, which of course had nothing to do with how good the protection was.

Small Fix

Preferred format was ignored if the tested format had a density “hint”, for example if specified as being a long track.

Processing Shortcuts

A huge speed improvement was implemented. The analyser tries to decode a track first using any of the formats found during the decoding of the previous tracks, basically a “MRU” (Most Recently Used) algorithm. It then tries the rest of the formats if the new result is not satisfactory. Since most of the games only use 2-3 formats on the same disk, this enhances decoding speed considerably.
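A minimal sketch of this ordering, assuming a scoring function that rates how well a format decodes a track. The names `analyse_track`, `try_decode` and `good_enough`, and the 0.0 to 1.0 score range, are hypothetical and not taken from the analyser.

```python
def analyse_track(track, formats, mru, try_decode, good_enough=1.0):
    """Try recently used formats first, then the remaining ones.

    `try_decode(fmt, track)` is assumed to return a score in 0.0..1.0.
    `mru` is a list of format names, most recent first, updated in place.
    """
    best_fmt, best_score = None, 0.0
    candidates = list(mru) + [f for f in formats if f not in mru]
    for position, fmt in enumerate(candidates):
        # All MRU formats have been tried: stop if the result is satisfactory.
        if position == len(mru) and best_score >= good_enough:
            break
        score = try_decode(fmt, track)
        if score > best_score:
            best_fmt, best_score = fmt, score
    if best_fmt is not None:
        if best_fmt in mru:
            mru.remove(best_fmt)
        mru.insert(0, best_fmt)          # most recently used goes to the front
    return best_fmt, best_score


# Usage with a fake decoder that records which formats were attempted.
calls = []

def fake_decode(fmt, track):
    calls.append(fmt)
    return 1.0 if fmt == track["format"] else 0.0

formats = ["AmigaDOS", "PDOS", "SS", "MT"]
mru = []
first = analyse_track({"format": "SS"}, formats, mru, fake_decode)
calls_first = len(calls)
calls.clear()
second = analyse_track({"format": "SS"}, formats, mru, fake_decode)
calls_second = len(calls)
print(calls_first, calls_second)
```

In this toy run the first track needs every format to be tried, while the second track on the same disk is settled after a single attempt.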

Added an automatic escape point right before the decoding occurs that can cancel decoding when certain criteria are met. This prevents the rather complex decoding mechanism from taking place, or even being initiated, when it is not necessary. It even occurs before decoder pre-processor values are copied, since they take considerable space with certain formats and when you include the amount of decoding that is involved, it certainly makes sense.

All pre-processor data was then organised in a way that whenever a new block of decoding occurs, a simple blind memory copy completely sets all the variables, pointers etc. that are used through decoding to their initial value without further processing. This is similar to how you would clear screen memory to start from scratch because you do not care about the contents.

Block Descriptors

A track can contain one or more blocks of data; the exact number depends on the format. For example the AmigaDOS format track contains 11 blocks. As the name implies, a block descriptor is the representation of the block data format in the analyser.

Sync Values

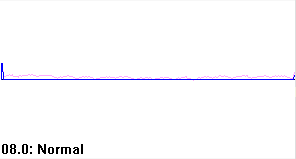

A “sync” is (in theory) not produced by the encoding used on the data area. Therefore it can mark the beginning (sometimes not really the beginning) of a new physical/logical data area, known as a block. The PC and AmigaDOS formats both use MFM “0x4489” as the sync pattern (Fig. 5), however there is the possibility that a custom Amiga format has many different sync values on the same track.

The process of sync scanning finds the possible sync values on a track and thus the block areas. So a format descriptor (for a track) is defined by its block descriptors. This indicates what kind of blocks are contained by it and any parameters that these block descriptors expect.

Sync Processing

This is only supported over the physical layer due to the fact that data analysis is tied to finding possible spots on the bitstream that may mark the start of real data. In fact, it is the only process to do this as all other methods ultimately use the marks that are found here.

The method of finding such spots is completely and absolutely dependent on the physical layer - and not related to any others at all. This is already a deeply involved process, and adding more features to it is not such a good idea at the moment. As sync scanning is the only physical layer dependent function, it is limited to only one physical format as no system is capable of using different syncing methods at the same time anyway. Of course it is possible to use different syncing at various spots, just not at the same location.

Generic Comparators

As it was in the old analyser, generic comparators are supported for both real and inherited values.

- “<empty>” - we don’t care

- “<value>” - equal to value

- “] <value>” - less than or equal to value

- “[ <value>” - greater than or equal to value
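As a rough illustration, the four comparator forms could be evaluated like this. The function name `matches` is hypothetical, and accepting Python-style integer literals (such as `0x4489`) for the value is an assumption made for the sketch.

```python
def matches(comparator, actual):
    """Evaluate a generic comparator string against an integer value."""
    text = comparator.strip()
    if not text:
        return True                       # "<empty>": we don't care
    if text[0] in "][":
        limit = int(text[1:].strip(), 0)
        if text[0] == "]":
            return actual <= limit        # "] <value>": less than or equal
        return actual >= limit            # "[ <value>": greater than or equal
    return actual == int(text, 0)         # "<value>": equal


print(matches("", 5), matches("11", 11), matches("] 3", 4), matches("[ 2", 2))
```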

Block Descriptors - New or Changed

All blocks for formats mentioned in the Format Descriptor section have been implemented, but there is not much point replicating these formats here. Instead let's talk about something that has changed that affects the block descriptors - sync processing.

Sync Scanning

Made a huge speed improvement on sync scanning. To “compensate” for this an optimisation that eliminated syncs that were “unlikely” to be used has now been removed. It is still much faster than the old version.

Sync Processing

The custom sync pre-processing (syncs whose values are not covered by the sync-set selected for processing) was moved to the pre-processor stage - where it would have been in the first place if there was a pre-processor stage in the original processing pipeline.

Data Descriptors

Format descriptors are defined by what block descriptors are used in them and whatever parameters are needed. In turn, blocks are described in terms of data descriptors and their parameters.

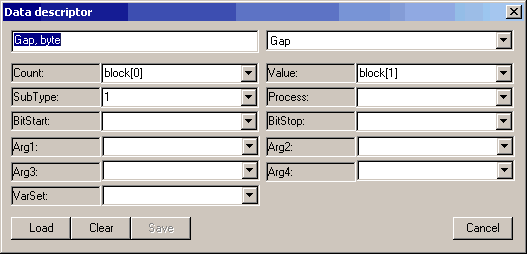

As covered in previous WIPs, “data descriptors” are user-defined descriptions for a chunk of data. They indicate the “data field type” along with parameters to nail down exactly what the chunk of data is, and what it is used for (Fig. 6). A data chunk or item is the smallest element of a format.

The example block descriptor for AmigaDOS (and indeed for any format) discussed later shows these “data descriptors” as user definable functions. If you were programming, think of it as defining your functions first (in our case this would be the data descriptors) and then using them in context (in our case in the block descriptors). So basically, all the names are arbitrary. The person who writes the descriptor chooses them, including the actual name of the format.

Data Descriptors - New or Changed

Generic Parameter Fields

Added new generic parameter fields for data descriptors. The generic fields and their common meanings are now:

- Type: The data field type to use, this is explained later.

- Count: Area number or data count.

- Value: Expected value.

- SubType: Depending on its context, this is information about the item, like encoder type or the basic type of data (byte, word, longword, etc.).

- Process: Processing requirement, like encryption.

- BitStart, BitStop: Values are always represented as 32 bits internally, and it is possible to reference only a bitfield of them. The complete value is selected as 0, 31, but any valid field selected can now be processed.

- Arg1...4: Generic placeholders for arguments to be passed to (for example) external processes.

- VarSet: Selects a variable where decoded data is placed. If more than one piece of data is being decoded by the data element, the variable is the starting point of the array being filled with decoded data.

As before, all of these support values and inherited values (parameters) from both the format and the block descriptor level.
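The BitStart/BitStop selection can be pictured with a small helper. This is a sketch only: the function name `bitfield` is hypothetical, and it assumes an inclusive range with bit 0 as the least significant bit, which the text above does not specify.

```python
def bitfield(value, bit_start=0, bit_stop=31):
    """Extract bits bit_start..bit_stop (inclusive) from a 32-bit value.

    Assumption: bit 0 is the least significant bit.
    """
    if not 0 <= bit_start <= bit_stop <= 31:
        raise ValueError("invalid bit range")
    width = bit_stop - bit_start + 1
    return ((value & 0xFFFFFFFF) >> bit_start) & ((1 << width) - 1)


print(hex(bitfield(0x12345678)))          # complete value, range 0, 31
print(hex(bitfield(0x12345678, 8, 15)))   # one byte out of the middle
```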

Data Field Types

The descriptor language is a typed system. Every data descriptor must indicate the type of data it represents. The types are hard coded functions that define the “type” of each chunk of “data” on a block. Just like the C programming language where you might define a data type to be “byte”, “int” or “long”, here we define things as Mark, Sync, Data, etc.

The example block descriptor for AmigaDOS (and indeed for any format) shows the “data descriptors” as the user definable functions and not “data field types”. If this is confusing, just think of it as:

- Data types indicate the “type” or “category” of the data chunk.

- Data descriptors indicate the type (as above), its size, what it is used for and other information specific to each type (most notably the parameters needed from the format and block descriptors).

Data Field Types - New or Changed

Current Set of Data Elements

Only some new data elements now remain in our disk description language. There may be a few others added later but the following set are enough to describe every format so far supported:

"Area start", "Area stop"

Sets the beginning and end of a logical data block. In the programming world a curly brace pair “{” / “}” is more natural (in C or Java for example) as areas are encapsulated (non-overlapping), however we need to be able to set overlapping areas by design. An example of another “language” that (used to) “allow” area overlapping like this is HTML.

A data block is an area made of one or more data elements. Their number and size are irrelevant as they are calculated at run-time, and they may even change depending on what is defined, as is the case for encoding.

After each we define the area number, which can be used to reference the area. This is any arbitrary value in the range 1...n.

"Area encode"

Info: An arbitrary number of layers can be applied to any area. While for readability the physical and logical layers are separated, in practice they are all applied to the same decoder chain in specified order after the reading of the bitstream has been done.

This is the encoding layer, with the following parameters:

- “count”: Area number where encoding applies.

- “value”: Physical encoding (MFM, MFM2, FM, GCR...).

- “subtype”: Logical encoding, like Amiga odd/even long encoding or Amiga odd/even long block encoding (AMG-2/3 in the Trace world).

- “process”: Any process to be applied over the data, like encryption.

"Mark"

Also known as “sync”, renamed to be more in line with Trace terminology. Treated as data, but encoding can be modified, and of course used to spot areas on a track in the first place. Generating these through the encoding layer is supported, like $4489 is $a1 with robbed clock bit #10. It has the following parameter:

“process”: An iterator containing command/value nibble (4 bit) pairs. It is easy to read as a hexadecimal value, for example “$1a” stands for robbed clock bit ($1.), bit#10 ($.a).
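The $a1 to $4489 relationship can be checked with a few lines of code. This is a sketch under stated assumptions: the function names are hypothetical, only the “robbed clock bit” command ($1.) is handled, and bits are numbered from 0 at the most significant (first recorded) bit of the 16-bit raw word. That numbering is an inference, chosen because it reproduces $4489 from the “$1a” example.

```python
def mfm_encode_byte(value, previous_bit=0):
    """Return the 16-bit MFM pattern (clock/data interleaved, MSB first)."""
    raw = 0
    prev = previous_bit
    for i in range(7, -1, -1):
        data = (value >> i) & 1
        # MFM rule: a clock bit is written only between two zero data bits.
        clock = 1 if (prev == 0 and data == 0) else 0
        raw = (raw << 2) | (clock << 1) | data
        prev = data
    return raw


def apply_mark_process(raw, process):
    """Apply one command/value nibble pair, e.g. 0x1a = rob clock at bit 10.

    Assumption: bit 0 is the most significant bit of the 16-bit raw word.
    """
    command, bit = process >> 4, process & 0xF
    if command == 0x1:
        return raw & ~(1 << (15 - bit)) & 0xFFFF
    raise ValueError("unknown command nibble")


plain = mfm_encode_byte(0xA1)
mark = apply_mark_process(plain, 0x1A)
print(hex(plain), hex(mark))
```

Regular MFM encoding of $a1 gives $44a9; removing the one clock bit gives $4489, a pattern that normal data encoding would not produce.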

"Data"

Data area, all of which support:

- “count”: Count of data in its unencoded format, i.e. the real size, which completely depends on the encoding used.

- “value”: Expected value.

- “subtype”: Type of unencoded data, 1=byte, 2=word, 3=longword.

"Gap"

This is another data area, but its size may be altered via mastering - if it is allowed, and errors found after it can normally be ignored.

"EDC"

Better known as a checksum, but this is more in line with Trace terminology. A checksum is generated for the selected area, and stored on the spot of the command. Checksums can be nested and/or overlapped; the analyser resolves the dependencies and thus the correct order of calculating the values.

- “count”: Area number where the “process” is performed on original data. Due to various shortcuts in their code, some EDCs seem like they were originally performed on raw data but in reality they worked with un-encoded data.

- “value”: Starting value for the checksum “process”. Sometimes it is an arbitrary value, though most of the time 0.

- “subtype”: Type of unencoded data, 1=byte, 2=word, 3=longword.

- “process”: The checksum method.

"Ext"

A call to an external process during decoding, such as the decryption key finder algorithm for PDOS.

While these may seem only a handful of instructions (compared to the old and now abandoned scripting scheme), in practice they result in a very powerful scripting language, one that is considerably more complex to process than the original used to be. This is especially so considering the use of variables, ranges, areas, encoding layers, etc. All these data types support all the search modes applicable.

Integrity (Checksum) Methods

As we have talked about in previous WIPs, any integrity information that is stored will be used to verify that the disk is authentic. As each game uses different disk formats it also means that they use different methods to verify that the data is okay.

The data type to support these different checksums is “EDC” as explained above. It is named such to be more in line with Trace terminology and the Freeform disk description language.

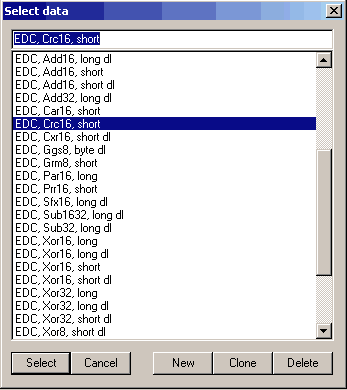

The following checksum processes have now been added to the EDC data type:

- Add16 (16 bit add)

- Add32 (32 bit add)

- Sub32 (32 bit subtract)

- Arithmetic 16 bit

- Arithmetic 16 bit (alternate)

- Crc16 (CCITT)

- Xor16 (16 bit xor)

- Xor32 (32 bit xor)

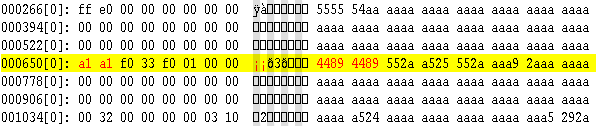

- + a ton of other strange ones (see Fig. 7)...

Several of the EDCs in Fig 7 are the same of course, but calculated on different layers (dl = data layer = fully decoded data), or stored with different data sizes (byte, word, longword).

Also added is an option to calculate checksums after the “transport layer” (we will talk about this layer later) or after all layers are decoded, which is required by some formats.
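For readers unfamiliar with these checksum families, here is a sketch of textbook versions of a few of them, each taking a starting value in the spirit of the “value” parameter. The exact definitions used by individual disk formats may well differ; the function names, the `EDC_PROCESSES` table and the use of big-endian words (as on the 68000) are assumptions for illustration.

```python
def words(data, size):
    """Split bytes into big-endian integers of `size` bytes each.

    Assumes len(data) is a multiple of `size`.
    """
    return [int.from_bytes(data[i:i + size], "big")
            for i in range(0, len(data), size)]


def add_sum(data, size, value=0):
    """Wrap-around addition of all words, starting from `value`."""
    mask = (1 << (8 * size)) - 1
    for w in words(data, size):
        value = (value + w) & mask
    return value


def xor_sum(data, size, value=0):
    """Exclusive-or of all words, starting from `value`."""
    for w in words(data, size):
        value ^= w
    return value


def crc16_ccitt(data, value=0xFFFF):
    """Bitwise CRC-16 with the CCITT polynomial 0x1021, MSB first."""
    crc = value
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


EDC_PROCESSES = {
    "Add16": lambda d, v=0: add_sum(d, 2, v),
    "Add32": lambda d, v=0: add_sum(d, 4, v),
    "Xor16": lambda d, v=0: xor_sum(d, 2, v),
    "Xor32": lambda d, v=0: xor_sum(d, 4, v),
    "Crc16": crc16_ccitt,
}

area = bytes.fromhex("0001000200030004")
for name, process in EDC_PROCESSES.items():
    print(name, hex(process(area)))
```

Keeping the processes in a table keyed by name mirrors the idea that the “process” parameter only selects a method, while the area and starting value come from the descriptor.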

New External Process

Added a new external process, a decryption key finder algorithm for PDOS.

Other Things New or Changed

Processing Shortcuts

Various things added to the analyser to skip processing where it is not needed.

- Added “escape” (ESC) option for block descriptor: block decoding can be explicitly set to be cancelled (skipping to next sync, thus trying to decode at the next position), if a data item does not score perfectly.

- Added “break” (BRK) option for block descriptor: format processing can be explicitly set to be cancelled, if a data item does not score perfectly.

- Added “break” (BRK) option for format descriptor: format processing can be explicitly set to be cancelled, if a block does not score perfectly.

All this means that both decoding of blocks at any point and the process of matching or decoding a format, can be aborted under certain criteria. The stage option at the format level marks the various processing levels as it is in the old analyser.

Data Encoding Layers

MFM dependencies were removed in several places, and physical layer support was added for when we come to support other physical encodings.

There are three different levels of encoding supported:

- Physical: MFM, MFM2, FM, GCR, etc. (only MFM supported for now).

- Logical: Various encodings.

- Process: Any additional processes applied.

In reality these can be placed anywhere in the decoder chain - although most combinations do not make sense. Their number, order or combination is not restricted. Some arbitrary limits do apply for practical reasons, but could be changed easily if it was ever needed. The raw data is treated as a bitstream, which goes through a decoder chain to get the real values. The “Encode” command basically adds processes to this decoder chain.

Later, all the upper level constructs were finally in place (again) and work was started to add the new decoder.

Later still, the decoder chain code was created. As expected it was very complex, but it was the only way to make the data encoding transparent for all the higher-level functions.

Added MFM decoding support to decoder chain, but as a physical layer only at the moment. Later a logical layer version will be added too. The basic difference is that the physical layer (also known as the transport layer) knows exactly how to stream data from a dump, while a logical layer works with data that has already been streamed. There is much more involved (programming wise) in adding a physical layer to the decoder chain than adding a logical layer since logical layers do not care about streaming details, buffer underrun/overrun, conditions, etc. All of these exceptions are already taken care of by the combined efforts of the transport layer and the decoder chain.

Added various checks related to this behaviour to the pre-processor stage, like ensuring the transport layer is the first encoding.
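The shape of such a chain, together with the “transport layer first” check, might look like the following. Everything here is a simplified illustration: the `Layer` class, the function names and the toy `invert` process are hypothetical, and the MFM layer simply keeps the data cell of each clock/data pair without validating clock bits.

```python
class Layer:
    """One step of a decoder chain: a name, a kind and a function."""

    def __init__(self, name, kind, func):
        self.name, self.kind, self.func = name, kind, func


def mfm_data_bits(raw_bits):
    """Physical (transport) layer: keep the data cell of each clock/data pair."""
    return raw_bits[1::2]


def invert(bits):
    """Toy 'process' layer standing in for something like decryption."""
    return [b ^ 1 for b in bits]


def check_chain(chain):
    """Pre-processor style check: the transport layer must come first."""
    if not chain or chain[0].kind != "physical":
        raise ValueError("the transport layer must be the first encoding")


def run_chain(chain, raw_bits):
    """Push a raw bitstream through every layer in the specified order."""
    check_chain(chain)
    data = raw_bits
    for layer in chain:
        data = layer.func(data)
    return data


mfm = Layer("MFM", "physical", mfm_data_bits)
toy = Layer("invert", "process", invert)
raw_bits = [int(b) for b in format(0x44A9, "016b")]   # MFM cells for $a1

decoded_plain = run_chain([mfm], raw_bits)
decoded_processed = run_chain([mfm, toy], raw_bits)
print(decoded_plain, decoded_processed)
```

Higher-level code only sees the output of `run_chain`, which is what makes the encoding transparent to it.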

Added several Commodore Amiga specific encodings to the decoder chain, things like:

- Amiga continuous odd/even, long style (amg-2) encoding.

- Amiga block odd/even, long style (amg-3) encoding.

The references in brackets (amg-2, amg-3) are how Trace refers to these encodings.
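A sketch of the two layouts, working on data bits only (MFM clock bits are ignored here). The odd/even bit split is the commonly documented AmigaDOS technique; mapping “continuous” to odd/even pairs per longword and “block” to all odd halves followed by all even halves is our reading of the descriptions given earlier, and the function names are hypothetical.

```python
MASK = 0x55555555


def split_long(value):
    """Return the (odd, even) bit halves of a 32-bit longword."""
    return (value >> 1) & MASK, value & MASK


def join_long(odd, even):
    """Recombine odd and even halves into the original longword."""
    return ((odd & MASK) << 1) | (even & MASK)


def encode_continuous(longs):
    """Odd/even pairs: odd0, even0, odd1, even1, ..."""
    out = []
    for value in longs:
        out.extend(split_long(value))
    return out


def decode_continuous(raw):
    return [join_long(raw[i], raw[i + 1]) for i in range(0, len(raw), 2)]


def encode_odd_even_block(longs):
    """Odd/even blocks: odd0, odd1, ..., then even0, even1, ..."""
    halves = [split_long(value) for value in longs]
    return [odd for odd, _ in halves] + [even for _, even in halves]


def decode_odd_even_block(raw):
    n = len(raw) // 2
    return [join_long(raw[i], raw[n + i]) for i in range(n)]


data = [0x12345678, 0xDEADBEEF]
continuous = encode_continuous(data)
block = encode_odd_even_block(data)
print([hex(v) for v in continuous])
print([hex(v) for v in block])
```

Both layouts carry the same halves; only their order on disk differs, which is why a single data item plus a different Encode setting is enough to describe either.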

More Sync Processing

Added support for mark processing/decoding. At first only the exact count was supported, but the advanced search mode required support routines for things like saving / restoring the decoder chain to a known state and so it was added later the same day.

Basically the data types can find out the amount of data belonging to a particular data element by themselves. This is referred to as “count” during the process, which can be an arbitrary known value (or of course a variable as well, if the value is resolved by the time of data processing) - this is the normal situation.

Data descriptions are either: “this data element is made of a known N number of $4489 marks” or “this data element is made of a known N number of mark recordings”. However there is an advanced search mode where the count is unknown, and this is found out by testing for known criteria; the count is set to the number of data elements that satisfy the selected condition. In this case, either the data should be encoded as a mark value (MFM recording rules, but not MFM encoding rules) or the raw (recorded) data should match the selected mark value. The decoder is then able to satisfy descriptions like, “this data element is made of unknown number of $4489 marks” or “this data element is made of unknown number of mark recordings”, as well as limiting the number to either a minimum or maximum value such as “this data element is made of unknown number of mark recordings, 3 consecutive marks at most”, etc.
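The “unknown count” case can be illustrated with a simple scan. This sketch works on word-aligned raw values for clarity, whereas a real bitstream is not word-aligned; the function name `count_marks` is hypothetical, and treating the maximum as the point where counting stops (rather than as a reason to reject the match) is an assumption.

```python
def count_marks(raw_words, start, mark=0x4489, minimum=0, maximum=None):
    """Count consecutive `mark` words beginning at index `start`.

    Returns the count found, capped at `maximum` if given, or None when
    fewer than `minimum` marks are present.
    """
    count = 0
    while start + count < len(raw_words) and raw_words[start + count] == mark:
        if maximum is not None and count == maximum:
            break
        count += 1
    return count if count >= minimum else None


track = [0x4489, 0x4489, 0x4489, 0x4489, 0x5555]
print(count_marks(track, 0))                # unknown number of marks
print(count_marks(track, 0, maximum=3))     # 3 consecutive marks at most
print(count_marks(track, 3, minimum=2))     # too few marks to match
```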

As usual the above $4489 value is just selected as an example, it could be any arbitrary value, variable or parameter passed. In case of a GCR recording, it is obviously not 16 bits in raw format. To be precise, marks can be defined “properly” giving clocking details in the case of MFM for a byte value, or for convenience as a raw word value - the pre-processor takes care of all the conversions needed.
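The unknown-count search can be pictured with a very small Python sketch. It assumes the raw stream is already available as a list of 16-bit words, and `count_marks` with its `minimum` and `maximum` arguments is a hypothetical helper, not part of the analyser.

```python
def count_marks(raw_words, mark=0x4489, minimum=0, maximum=None):
    """Count consecutive raw words equal to `mark` at the start of raw_words.

    Returns the count, or None if fewer than `minimum` marks were found.
    """
    count = 0
    for word in raw_words:
        if word != mark:
            break
        if maximum is not None and count == maximum:
            break  # "N consecutive marks at most"
        count += 1
    return count if count >= minimum else None


stream = [0x4489, 0x4489, 0x5555, 0x4489]
print(count_marks(stream))             # unknown number of marks
print(count_marks(stream, maximum=1))  # at most one mark
```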

It is worth noting that most data types do not know or care about the encoding layer(s), so it is not an issue how or when the data is derived. In the old analyser all these details were implicit, and in fact implemented by the data types. This therefore resulted in an unmanageable number of data type variants. This is no longer the case of course!

Mark processing is slightly different in this respect, since it must stream its data from the transport layer in order to identify a mark: only the transport layer supports the recording details needed to perform exact raw data checking, and exact checking is necessary. As an example, the MFM $a1 mark in one encoding can be the familiar $4489 raw value (see the AmigaDOS example at the end), but selecting a different clocking for it gives a completely different raw value. Checking only the decoded data would let any encoding that results in $a1 match, whereas the transport layer makes the raw data stream accessible.

Parameters

The number of parameters that can be passed along from various descriptors (format → block or block → data) was changed to 8 - this should be more than enough. The previous 4 required “magic numbers” in some cases. As a consequence of this, the associated editors have changed too.

Read-only Parameters (constants)

Added access to protected parameters from the analyser core. These are read-only variables at preset positions (such as the cylinder, track and head number being analysed) that ease the writing of descriptors. Because the access is now standard, descriptors can use these values without any of the previous “magic” involved. They are still read-only.

Variables

Added support for variables that can be set by values read from the actual data. Their lifetime is the processing of one track format and they can of course be used in generic comparisons. Variables are especially useful for formats where the data itself stores the block size, gap size, etc. These are usually hardware-oriented formats like Atari/PC, which are common on the Amiga both as a protection and as a disk format.

Pre-processors

Moved the various pre-processors into one logical block, so they can use data derived by various pre-processor modules instead of assuming such data is unknown.

Bit Orientated Processing

The various parts of the analyser have been reorganised to fully support bit-oriented processing. This affected quite a few parts of the program, and various parts needed to be updated.

Descriptor Integrity Checks

Because descriptors and their relationships to other things may get very complex, an integrity checking function was added that can detect most of the definition errors that can occur in a block, format or data descriptor. The “check” (CHECK) option calls the pre-processor to highlight either block/data definition problems in the block being edited, or to find a bad block in the selected format. This can be thought of as the syntactic and semantic analysis stages of a typical compiler.

Data Merge

A new syncset filtering option was added, “DataMerge”. Normally when marks are filtered so that a data block must follow a mark and marks are to be found in a group, only the last mark is selected by the filter, since that is the only one that is followed by a data block. The data merge option tags all such marks, so no special tricks are needed to find each one of them.

Added data merging detection to the pre-processor. There are encodings, typically the ones that store parts of the same data value in separate blocks, where the whole area must be decoded completely to produce the expected data. However, if more than one adjacent data element shares such an encoding, all of their sizes should be merged to get the correct size of the area (or offset in data part blocks) to be decoded. This kind of behaviour is needed at least by the Elite Systems format, and quite possibly by some forthcoming formats as well.

Setting Variables Explicitly

It is now possible to do simple calculations and set variables with the result during decoding. This is needed since some formats calculate the correct track number used by the format.

Taking Preferred Format

Some formats are ambiguous (mostly due to parity) and in fact might only differ in size, so the parity itself cannot differentiate the format from its relatives. Digital Magic and ZZKJ are such twin formats. When a format is manually selected on the starting track of the analysis, the analyser prefers the selected format if it decodes properly, so that changing it manually will not be needed later.

An Example: AmigaDOS

Okay, now that we have gone through all the background on what has happened over the last couple of months, let's see an example of exactly how we describe a disk format. What follows are the AmigaDOS format and block descriptors written for the analyser.

AmigaDOS Format Descriptor

Reminder: A format contains one or more block descriptors.

The AmigaDOS format has 11 blocks within each track.

AmigaDOS block: 0, #2, 0 *1

AmigaDOS block: 1, #2, 0 *1

AmigaDOS block: 2, #2, 0 *1

AmigaDOS block: 3, #2, 0 *1

AmigaDOS block: 4, #2, 0 *1

AmigaDOS block: 5, #2, 0 *1

AmigaDOS block: 6, #2, 0 *1

AmigaDOS block: 7, #2, 0 *1

AmigaDOS block: 8, #2, 0 *1

AmigaDOS block: 9, #2, 0 *1

AmigaDOS block: 10, #2, 0 *1

Let's take just one of these lines and break it down...

AmigaDOS block: 0, #2, 0 *1

Essentially this statement passes 3 parameters to the named block descriptor, which in our case is the “AmigaDOS” descriptor. The parameters are described below in the order they appear.

- “0” is the block number.

- “#2” is a system variable (automatically set by the analyser), indicating the current track number.

- “0” is used as the header data value.

The final “*1” is the weighting factor applied to the block’s “score” when the score is calculated for all the blocks in a format descriptor. This contributes to how well a track matches this format if this block exists. In this case each block is weighted with a “1x” factor, meaning that all blocks have equal weighting when deciding whether a track being analysed matches this format.
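To make the idea of weighting concrete, here is one plausible way such a score could be combined, written as a Python sketch. The analyser's real scoring formula is not given in this update, so the ratio used below and the `format_score` name are purely assumptions.

```python
def format_score(blocks):
    """blocks: list of (weight, found) pairs, one per line of a format descriptor.

    Returns the matched weight as a fraction of the total weight (assumed formula).
    """
    total = sum(weight for weight, _ in blocks)
    if total == 0:
        return 0.0
    matched = sum(weight for weight, found in blocks if found)
    return matched / total


# 11 AmigaDOS blocks, each weighted 1x, with one block missing on the track.
blocks = [(1, True)] * 10 + [(1, False)]
print(format_score(blocks))
```

With equal weights every missing block costs the same; a larger weight on one line would make that block count for more in the result.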

AmigaDOS Block Descriptor

Above we defined the format descriptor, showing how an “AmigaDOS” track is comprised of 11 “AmigaDOS” blocks. Now we need to define the “AmigaDOS” block, which will be used by the format descriptor.

What follows is a listing of how an AmigaDOS block is defined at the lowest level.

Encode: 1, 1

Encode: 2, , 1

Encode: 3, , 2

_Area start: 1

Gap, byte: 2, 0 *1

Mark, byte: 2, $a1, $1a *1 <ESC>

_Area start: 2

Data, byte: 1, $ff *1

Data, byte vf1: 1 *1

Sector, byte: 1 *1 <ESC>

Data, byte: 1, ]11 *1

Data, byte vf2: 16 *1

_Area stop: 2

EDC, Xor16, long: 2, 0 *2

EDC, Xor16, long: 3, 0 *3

_Area start: 3

Data, byte: $200 *1

_Area stop: 3

_Area stop: 1

Data Descriptor Definitions

These are defined in another part of the analyser, but let's just describe how the particular ones used in the AmigaDOS block descriptor are formed. Note that the description of the column headers is given earlier in the WIP update.

Data descriptors are named. In the example above, the name is everything before the colon. They are our block descriptor language functions. They refer to their associated descriptor, so it is probably best to define what is in the data descriptors mentioned here before we look at the descriptor as a whole.

You may notice there are some columns missing here (i.e. BitStart, BitStop, and the miscellaneous columns), this is just because they are not used in this example.

Key:

BP = Uses specified Block Parameter

FP = Uses specified Format Parameter

n/a = Column not applicable for this data chunk

| Function | Type | Count | Value | SubType | Process | VarSet |
|---|---|---|---|---|---|---|
| Encode | Area encode | BP #0 | BP #1 | BP #2 | BP #3 (unused in example) | BP #4 (unused in example) |
| _Area start | Area start | BP #0 | n/a | n/a | n/a | n/a |
| Gap, byte | Gap | BP #0 | BP #1 | 1 (i.e. byte) | n/a | n/a |
| Mark, byte | Mark | BP #0 | BP #1 | 1 (i.e. byte) | BP #2 | n/a |
| Data, byte | Data | BP #0 | BP #1 | 1 (i.e. byte) | n/a | BP #2 (unused in example) |
| Data, byte vf1 | Data | BP #0 | FP #1 | 1 (i.e. byte) | n/a | BP #2 (unused in example) |
| Sector, byte | Data | BP #0 | FP #0 | 1 (i.e. byte) | n/a | n/a |
| Data, byte vf2 | Data | BP #0 | FP #2 | 1 (i.e. byte) | BP #2 (unused in example) | n/a |
| _Area stop | Area stop | BP #0 | n/a | n/a | n/a | n/a |
| EDC, Xor16, long | EDC | BP #0 | BP #1 | 3 (i.e. longword) | 1 (i.e. Xor16 method) | n/a |

As you can see, many of these are the very same types; they just have parameters set to different values or taken from the format or block descriptors at runtime. Let's have a quick look at where this parameter passing is used in the format descriptor.

The format for a line in the format descriptor is:

[Name]: [Parameter #0], [Parameter #1], ... *[weight]

A line in the AmigaDOS format descriptor is:

AmigaDOS block: 0, #2, 0 *1

Let's convert that to its logical form for simplicity (for AmigaDOS blocks):

[AmigaDOS block]: [block], [track], [header value] *1
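The line format above is simple enough to split mechanically. The following Python sketch shows one way to do it, purely as an illustration: the `parse_descriptor_line` function is hypothetical, parameters are kept as text, and an empty parameter slot is returned as `None`.

```python
def parse_descriptor_line(line):
    """Split '[Name]: p0, p1, ... *weight' into (name, params, weight)."""
    # Names may contain commas ("Gap, byte"), so split on the first colon only.
    name, _, rest = line.partition(":")
    params_part, _, tail = rest.partition("*")
    # The weight is the first token after '*'; lines without '*' have none.
    tokens = tail.split()
    weight = int(tokens[0]) if tokens else None
    if params_part.strip():
        params = [p.strip() or None for p in params_part.split(",")]
    else:
        params = []
    return name.strip(), params, weight


print(parse_descriptor_line("AmigaDOS block: 0, #2, 0 *1"))
print(parse_descriptor_line("Encode: 2, , 1"))
```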

All these parameters are passed down to the block and data descriptors. These lower level descriptors now have access to Format Parameter #0, Format Parameter #1 and Format Parameter #2. So now let's have a look at the data descriptors that access them.

- Format Parameter #0: set to 0...10, used by the “Sector, byte” data descriptor, i.e., we search for blocks 0 to 10.

- Format Parameter #1: set to #2, used by the “Data, byte vf1” data descriptor. The #2 is actually a variable, hence the value in the data descriptor first resolves as Format Parameter #1, that turns out to be a reference to a variable at position #2.

- Format Parameter #2: set to 0, used by the “Data, byte vf2” data descriptor, which is referenced by the OS info block, i.e., we want all bytes of the OS info to be 0.
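The two-step resolution of a parameter such as “#2” can be sketched in Python as below. This is only an illustration: `resolve` and the `system_vars` mapping are hypothetical, with position 2 standing in for the current track number as described above, and “$” taken as the hexadecimal prefix used throughout the descriptors.

```python
def resolve(param, system_vars):
    """Resolve one format parameter (given as text) to a number."""
    text = param.strip()
    if text.startswith("#"):
        # Reference to a variable at a preset position, e.g. "#2".
        return system_vars[int(text[1:])]
    if text.startswith("$"):
        # Hexadecimal constant, e.g. "$200".
        return int(text[1:], 16)
    return int(text)


system_vars = {2: 40}  # pretend track 40 is being analysed
format_params = ["0", "#2", "0"]
print([resolve(p, system_vars) for p in format_params])
```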

You might wonder why we did it this way instead of just using fixed values. The reason is that many games use slight differences in the format descriptor, which usually causes an AmigaDOS track to be unreadable as most programs refuse to read such a track. These variations can be seen in the formats “AmigaDOS, trk mod” and “AmigaDOS, hdr data” where we can use exactly the same AmigaDOS block descriptor, just a different AmigaDOS format descriptor with a few of the parameters changed. Any changes only need to be done once.

AmigaDOS Block: Line by Line Explanation

Now the block descriptor is still not going to make a lot of sense without some proper explanation, so let's go through it line by line.

AmigaDOS Block Descriptor

Encode: 1, 1

Encode: 2, , 1

Encode: 3, , 2

_Area start: 1

Gap, byte: 2, 0 *1

Mark, byte: 2, $a1, $1a *1 <ESC>

_Area start: 2

Data, byte: 1, $ff *1

Data, byte vf1: 1 *1

Sector, byte: 1 *1 <ESC>

Data, byte: 1, ]11 *1

Data, byte vf2: 16 *1

_Area stop: 2

EDC, Xor16, long: 2, 0 *2

EDC, Xor16, long: 3, 0 *3

_Area start: 3

Data, byte: $200 *1

_Area stop: 3

_Area stop: 1

Line by Line Explanation

First we define Area 1 (first parameter) to be encoded as MFM (second parameter). The whole block is covered by Area 1, and therefore all data elements are set to be MFM encoded.

Note: These encode commands are defining the settings for the areas that are defined throughout this block descriptor. They can be anywhere, but they are nice and clear at the top.

Encode: 1, 1

Next Area 2 is encoded as Amiga continuous longwords (odd/even pairs) as defined by the third parameter. The second parameter is blank as that defines the physical encoding and as Area 1 encapsulates this area, it is already MFM encoded data. This area is the next layer of encoding above MFM and is the odd/even pairs.

Encode: 2, , 1

Area 3 is encoded as Amiga block longwords (odd/even blocks) as defined by the third parameter. As for Area 2, this area is encapsulated by Area 1 and therefore already MFM encoded data. After that it is encoded as odd/even blocks.

Encode: 3, , 2

Start Area 1. Just marking an area to cover all the data to encode.

_Area start: 1

There are then 2 bytes, with the value of 0 defining a gap. Weight 1x.

Gap, byte: 2, 0 *1

Next is another 2 bytes, the mark (sync), value $4489. Weight 1x. Skip decoding to next mark if it does not match.

Mark, byte: 2, $a1, $1a *1 <ESC>

Let's explain those values:

The $a1 is $44a9 in MFM, but since we are already encapsulated by Area 1 (defining MFM encoding), we give the unencoded value. The $1a is an iterator saying to rob/clear (clock) bit #10.

Bits are numbered from the left (as a bitstream or most display memories do), not from the right, starting at 0. Hence clearing bit #10 from $44a9 is $4489.
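The arithmetic can be checked with a few lines of Python. This is a sketch of standard MFM clocking (a clock bit is 1 only between two 0 data bits), assuming the data bit preceding the byte is 0; the function names are invented for this illustration.

```python
def mfm_encode_byte(value, prev_bit=0):
    """MFM-encode one byte into a 16-bit raw word (clock, data, clock, data...)."""
    raw = 0
    for i in range(7, -1, -1):
        data = (value >> i) & 1
        # A clock bit is set only when both neighbouring data bits are 0.
        clock = 1 if (prev_bit == 0 and data == 0) else 0
        raw = (raw << 2) | (clock << 1) | data
        prev_bit = data
    return raw


def clear_bit_from_left(word, bit, width=16):
    """Clear a bit, numbering bits from the left starting at 0."""
    return word & ~(1 << (width - 1 - bit)) & ((1 << width) - 1)


encoded = mfm_encode_byte(0xA1)
print(hex(encoded))                           # 0x44a9
print(hex(clear_bit_from_left(encoded, 10)))  # 0x4489
```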

Next is where Area 2 starts. This is the header area.

_Area start: 2

Amiga format specific byte of constant data, the value of which is $ff. Weight 1x.

Data, byte: 1, $ff *1

Then comes the track number, 1 byte of data, with the value taken from Format Parameter #1. Weight 1x.

Data, byte vf1: 1 *1

The sector number comes next and is 1 byte. The sector number is taken from Format Parameter #0. Weight 1x. Skip decoding at this mark position if it does not match. So basically go to the next mark and try again from the beginning of the block descriptor.

Sector, byte: 1 *1 <ESC>

Next is a gap distance of 1 byte, the value must be <= 11. Weight 1x.

Data, byte: 1, ]11 *1

Now we come to the OS information block which is 16 bytes of data, with the value taken from Format Parameter #2. Weight 1x.

Data, byte vf2: 16 *1

End Area 2.

_Area stop: 2

The header checksum is next, which is an Xor16 type checksum on Area 2. Starting value=0. It is weighted by a factor of 2 so the score will be worse if the checksum calculated on the header does not match the value stored here.

EDC, Xor16, long: 2, 0 *2

The data checksum is next, which is an Xor16 type checksum on Area 3. Starting value=0. It is weighted by a factor of 3 so the score will be worse if the checksum calculated on the data does not match the value stored here, i.e. the integrity of the data area is the most important.

EDC, Xor16, long: 3, 0 *3
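For reference, the commonly documented AmigaDOS checksum is an exclusive-or over the raw MFM longwords of an area, with the clock bits masked off at the end. The Python sketch below shows that calculation; treating it as what “Xor16” does is an assumption on our part, and `amiga_checksum` is a made-up name.

```python
def amiga_checksum(raw_longs, start=0):
    """XOR all raw MFM longwords of an area, keeping only the data bits."""
    value = start
    for raw in raw_longs:
        value ^= raw
    return value & 0x55555555


area = [0x55555555, 0x11111111]
print(hex(amiga_checksum(area)))
```

The starting value of 0 matches the second parameter given on the EDC lines.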

Start Area 3. This is the data area.

_Area start: 3

The actual data, 512 ($200) bytes of it, which can be any value. Weight 1x.

Data, byte: $200 *1

End Area 3.

_Area stop: 3

Lastly, end Area 1.

_Area stop: 1
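Putting the header part of the walkthrough together, the checks on Area 2 amount to something like the following Python sketch. It works on the 20 already decoded header bytes and ignores weighting and the escape behaviour entirely; `check_header` and its arguments are hypothetical names, not the analyser's.

```python
def check_header(header, track, sector, label_value=0):
    """Check 20 decoded header bytes against the rules described above."""
    if len(header) != 20:
        return False
    fmt, trk, sec, gap = header[0], header[1], header[2], header[3]
    label = header[4:20]  # the 16 bytes of OS information
    return (
        fmt == 0xFF                # format specific constant byte
        and trk == track           # Format Parameter #1
        and sec == sector          # Format Parameter #0
        and gap <= 11              # gap distance
        and all(b == label_value for b in label)  # Format Parameter #2
    )


header = bytes([0xFF, 40, 3, 8] + [0] * 16)
print(check_header(header, track=40, sector=3))
```

A format variant that changes the header data value would only need a different `label_value`, in the same way that the real descriptors only change a format parameter.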
